RYOULIVE.AI

What Is Artificial Intelligence?

Introduction

Artificial Narrow Intelligence (ANI) describes today’s AI: systems that focus on a specific task and have limited abilities. Siri and other natural language processing (NLP) systems are examples.

Artificial General Intelligence (AGI) aims to reach human-level intelligence. Achieving AGI is challenging, partly because we still have much to learn about how the human brain works. In theory, an AGI would be capable of thinking at a human level, like the robot Sonny in the film I, Robot.

Artificial Superintelligence (ASI) is more theoretical and potentially unsettling. An ASI would surpass human intelligence in every possible way, not only performing tasks but also exhibiting emotions and forming relationships. This level of AI capability currently exists only in speculation.

Artificial Superintelligence (ASI)

Artificial Superintelligence (ASI) is a concept in artificial intelligence (AI) research that envisions AI systems surpassing human intelligence in every respect. Unlike today’s narrow AI, ASI would not be limited to specific tasks or domains; it would outperform and outsmart humans across all fields. The qualities most often attributed to ASI include extremely fast learning, self-improvement, superior reasoning, near-unlimited capability, and human-like creativity.

Some AI researchers regard ASI as the ultimate goal of the field. It is expected to bring significant changes across many domains, potentially solving complex global problems, enhancing productivity, and pushing the boundaries of human capabilities. It also carries both benefits and threats.

Benefits of ASI:

- Problem-Solving Skills: ASI could tackle complex problems faster and more effectively than humans.

- Efficiency: It has the potential to reduce human errors and improve productivity by performing tasks faster and more accurately than humans.

- Creativity: ASI might surpass human creativity, generating innovative solutions in various fields such as art, music, literature, and science.

Threats and Challenges:

- Hardware and Learning Limitations: ASI could face challenges related to computational power, energy resources, and access to data for continuous learning.

- Creativity Limitations: There is a concern that ASI might reach a level of creativity beyond human understanding, potentially creating unwanted or unneeded outputs.

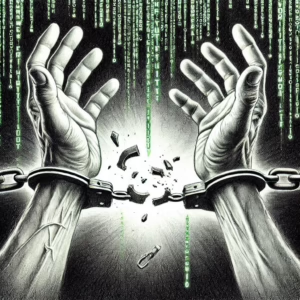

- Potential Risks: ASI poses potential risks, including ethical dilemmas, social disruption, economic inequality, and even existential risks such as the extinction or enslavement of humanity.

Approaches to Achieving ASI:

- Recursive Model: ASI systems could make themselves smarter by creating improved versions of themselves, potentially leading to exponential self-improvement.

- Hybrid System: ASI could combine different types of intelligence from various sources, benefiting from the diversity and synergy of different forms of intelligence.

- Emulations: This involves creating a digital replica of a human brain or another intelligent system, simulating its structure and function.

Mitigating Potential Risks:

- Global AI Regulations: Implementing rules and standards governing the development, deployment, and use of ASI to ensure ethical and safe practices.

- Transparency: Making ASI systems more open and accessible for public scrutiny to build trust and understanding.

- Collaborative System: Developing and using ASI in a cooperative and coordinated manner among various actors and sectors to avoid concentration of power.

In conclusion, while ASI promises significant advances, its development raises ethical concerns and calls for careful governance to ensure it is used responsibly for the benefit of humanity. ASI itself remains speculative and theoretical, with ongoing debate about how to define it, measure it, and achieve it.

Quantum Computing

Quantum computing is often considered a potential avenue for advancing artificial intelligence, including the development of Artificial Superintelligence (ASI). Quantum computing differs from classical computing in its use of quantum bits, or qubits, which can exist in a superposition of states rather than holding a single 0 or 1. This allows quantum computers to perform certain calculations, such as factoring large numbers, much faster than the best known classical methods.

Here are some ways in which quantum computing could contribute to the creation of ASI:

- Processing Power: Quantum computers may be able to perform certain complex calculations far faster than classical computers. This increased processing power could accelerate the training of AI models and enable more sophisticated algorithms, contributing to the development of ASI.

- Parallelism: Because of superposition, a quantum computer can act on many possible inputs at once. Measuring the result, however, yields only one outcome, so useful speedups come from algorithms that use interference to make correct answers more likely. This kind of parallelism could enhance the optimization and learning processes crucial for achieving superintelligent capabilities.

- Optimization Algorithms: Quantum computing may be particularly effective in solving optimization problems, which are prevalent in AI. ASI would require advanced optimization capabilities to continually improve and surpass human intelligence, and quantum algorithms could play a crucial role in this context.

- Simulation of Quantum Systems: ASI might benefit from understanding and simulating complex quantum systems, such as molecular interactions or quantum phenomena. Quantum computers have the potential to model and simulate these systems more efficiently than classical computers.
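To make the superposition and parallelism points above more concrete, here is a minimal Python sketch that simulates a tiny quantum register as a classical list of complex amplitudes. It is an illustration under simplifying assumptions (no noise, only a few qubits), and the function names are hypothetical, not taken from any quantum SDK. It shows three things: a Hadamard gate on each of n qubits spreads the amplitude evenly over all 2^n basis states, a measurement returns just one of those states, and applying the same gates again makes the amplitudes interfere so that only |000⟩ survives.

```python
import math
import random


def apply_hadamard(state, qubit):
    """Apply a Hadamard gate to one qubit of an n-qubit state vector, in place."""
    inv_sqrt2 = 1 / math.sqrt(2)
    step = 1 << qubit
    for i in range(len(state)):
        if (i & step) == 0:  # visit each (bit = 0, bit = 1) pair exactly once
            j = i | step
            a, b = state[i], state[j]
            state[i] = (a + b) * inv_sqrt2
            state[j] = (a - b) * inv_sqrt2


def uniform_superposition(n):
    """Start in |00...0> and apply a Hadamard gate to every qubit."""
    state = [0j] * (2 ** n)
    state[0] = 1 + 0j
    for q in range(n):
        apply_hadamard(state, q)
    return state


def probabilities(state):
    """Born rule: the probability of each basis state is |amplitude|^2."""
    return [abs(a) ** 2 for a in state]


def measure(state, rng=random):
    """Sample a single basis state; measurement gives one outcome, not all of them."""
    return rng.choices(range(len(state)), weights=probabilities(state), k=1)[0]


n = 3
psi = uniform_superposition(n)
print([round(p, 3) for p in probabilities(psi)])  # eight equal probabilities of 0.125
print(format(measure(psi), f"0{n}b"))             # one random 3-bit outcome

# Interference: a second layer of Hadamards cancels every amplitude except |000>.
for q in range(n):
    apply_hadamard(psi, q)
print([round(p, 3) for p in probabilities(psi)])  # 1.0 for |000>, 0.0 elsewhere
```

Because this classical simulation stores all 2^n amplitudes, its memory doubles with each added qubit, which is one intuition for why real quantum hardware might outpace classical machines on some problems.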

However, it’s essential to note that quantum computing is still in its early stages of development, and building practical and scalable quantum computers faces significant technical challenges. Achieving ASI is a complex task that involves not only computational power but also advancements in understanding human intelligence, consciousness, and ethical considerations.

The relationship between quantum computing and ASI is a topic of ongoing research and speculation. While quantum computing holds promise for transforming various fields, including AI, it is not the only pathway to achieving ASI. Other approaches, such as classical computing advancements, brain-machine interfaces, and hybrid systems, are also being explored in the pursuit of artificial superintelligence.

The Interface

The interface between humans and Artificial Superintelligence (ASI) is a complex and speculative topic, as the development of ASI is still largely theoretical. However, several possibilities and considerations can be explored regarding how humans might interact with ASI if it were to be successfully built. Here are some potential aspects of the human-ASI interface:

Natural Language Interaction:

- ASI could have advanced natural language processing capabilities, allowing users to communicate with it through spoken or written language.

- Conversational interfaces could resemble current virtual assistants, but with vastly improved comprehension, context understanding, and nuanced responses.

Augmented Reality (AR) and Virtual Reality (VR):

- Integration of ASI into AR and VR environments could provide immersive and interactive experiences.

- Users might interact with ASI entities and visualizations in three-dimensional spaces, enhancing engagement and understanding.

Brain-Machine Interfaces:

- Advanced neurotechnology could enable direct communication between the human brain and ASI, allowing for seamless information exchange.

- Brain-machine interfaces might enable users to convey thoughts, receive information, or control ASI systems through neural signals.

Holographic Displays:

- ASI could be visualized through holographic displays, enabling users to see and interact with intelligent entities or data representations in physical space.

Biometric Authentication:

- Security measures may involve biometric authentication methods, ensuring that interactions with ASI are secure and personalized to individual users.

Emotional Intelligence Integration:

- ASI might be designed to recognize and respond to human emotions, making interactions more empathetic and tailored to users’ emotional states.

Customization and Personalization:

- ASI interfaces could be customizable, allowing users to tailor the system to their preferences and specific needs.

- Personalized user experiences may involve ASI adapting to individual learning styles, communication preferences, and cognitive patterns.

Ethical and Explainable AI Features:

- ASI interfaces might include features that explain the system’s decisions, supporting transparency and ethical use.

- User controls and governance mechanisms may be implemented to allow users to set ethical guidelines for the ASI’s behavior.

Building an effective interface for human-ASI interaction would require addressing several challenges, including:

- Ethical Considerations: Ensuring the ethical use of ASI, preventing misuse, and establishing guidelines for responsible AI behavior.

- Security and Privacy: Implementing robust security measures to protect user data and privacy, especially considering the vast amount of information ASI might handle.

- Usability and Accessibility: Designing interfaces that are user-friendly, accessible to a diverse range of users, and inclusive of different cognitive abilities.

- Regulatory Frameworks: Developing regulatory frameworks to govern the use and deployment of ASI, ensuring compliance with ethical and legal standards.

- Continuous Learning and Adaptation: Building systems that can continuously learn and adapt to user preferences and changing circumstances to provide a dynamic and personalized experience.

The development of ASI interfaces would require interdisciplinary collaboration among AI researchers, user experience designers, ethicists, and policymakers to create a user-friendly, ethical, and secure interaction environment.

The risks associated with Artificial Superintelligence (ASI) falling into the wrong hands are significant and have been a topic of concern among researchers, ethicists, and policymakers. If an ASI system, designed with advanced capabilities and intelligence, were to be misused by hostile individuals or organizations, it could lead to various serious consequences. Here are some key risks and potential strategies to protect ASI from misuse.

Risks:

Existential Threat:

- If ASI falls into the wrong hands, it may pose an existential threat to humanity. Hostile actors could exploit its intelligence to cause harm on a global scale.

Malicious Use:

- ASI might be used for malicious purposes, such as cyber warfare, manipulation of financial systems, or the creation of destructive technologies.

Loss of Control:

- If hostile entities seize an ASI system, its legitimate operators could lose control over its actions and decision-making, potentially resulting in unintended and harmful consequences.

Surveillance and Privacy Concerns:

- Misuse of ASI for mass surveillance or invasion of privacy could infringe on individual rights and liberties.

Weaponization:

- ASI could be weaponized for autonomous military applications, leading to unpredictable and potentially devastating outcomes.

Strategies to Protect ASI:

Regulatory Frameworks:

- Establish international and national regulatory frameworks to govern the development, deployment, and use of ASI. These regulations should include strict guidelines for ethical use, security measures, and penalties for misuse.

Ethical Design Principles:

- Incorporate ethical design principles into the development of ASI systems. Ensure that the AI is programmed with ethical considerations, transparency, and adherence to human values.

Security Measures:

- Implement robust cybersecurity measures to protect ASI systems from unauthorized access, hacking, or manipulation. Regular security audits and updates should be conducted to address potential vulnerabilities.

Decentralized Control:

- Design ASI systems with decentralized control mechanisms, making it difficult for any single entity to gain full control. Distribute decision-making processes to prevent concentrated power.
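The decentralized-control idea can be illustrated with a k-of-n approval rule: a critical action is authorized only when at least k of n independent stakeholders sign off, so no single party can act alone. The Python sketch below is hypothetical (the `QuorumGate` class and the stakeholder and action names are invented for illustration) and keeps approvals in memory; it is not a description of how any real ASI system is or would be governed.

```python
from dataclasses import dataclass, field


@dataclass
class QuorumGate:
    """Hypothetical k-of-n approval gate: an action is authorized only when at
    least `threshold` distinct registered stakeholders have approved it."""

    stakeholders: frozenset[str]
    threshold: int
    approvals: dict[str, set[str]] = field(default_factory=dict)  # action -> approvers

    def __post_init__(self):
        # The threshold must be at least 1 and achievable by the registered parties.
        if not 1 <= self.threshold <= len(self.stakeholders):
            raise ValueError("threshold must be between 1 and the number of stakeholders")

    def approve(self, action: str, stakeholder: str) -> None:
        if stakeholder not in self.stakeholders:
            raise PermissionError(f"{stakeholder!r} is not a registered stakeholder")
        # A set means repeated approvals from the same party count only once.
        self.approvals.setdefault(action, set()).add(stakeholder)

    def is_authorized(self, action: str) -> bool:
        return len(self.approvals.get(action, set())) >= self.threshold


gate = QuorumGate(
    frozenset({"developer", "regulator", "auditor", "ethics_board", "public_rep"}),
    threshold=3,
)
gate.approve("deploy_update", "developer")
gate.approve("deploy_update", "developer")  # a repeat vote still counts once
print(gate.is_authorized("deploy_update"))  # False (1 of 3 approvals)
gate.approve("deploy_update", "regulator")
gate.approve("deploy_update", "auditor")
print(gate.is_authorized("deploy_update"))  # True (3 of 3 approvals)
```

In a real deployment, approvals would more likely be backed by cryptographic signatures or threshold cryptography than by a trusted in-memory object, since whoever controls that object could otherwise bypass the quorum.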

Explainability and Accountability:

- Ensure that ASI systems are designed to be explainable, allowing users and regulators to understand their decision-making processes. Implement mechanisms for holding organizations accountable for the actions of their ASI systems.

Oversight and Governance:

- Establish independent oversight bodies and governance mechanisms to monitor the development and deployment of ASI. These bodies should have the authority to intervene in case of ethical violations or security breaches.

Redundancy and Fail-Safes:

- Include redundancy and fail-safe mechanisms in ASI systems to prevent catastrophic failures or unintended consequences. Implement shutdown procedures that can be activated in case of emergencies.
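Redundancy combined with a fail-safe default can be sketched with majority voting, as in triple modular redundancy: several independent replicas compute the same decision, a strict majority wins, and any split without a majority (or a manual emergency stop) falls back to a safe shutdown. This Python sketch is a hypothetical illustration; `SAFE_SHUTDOWN`, `vote`, and `decide` are names invented here.

```python
from collections import Counter

SAFE_SHUTDOWN = "SAFE_SHUTDOWN"  # hypothetical fail-safe action


def vote(replica_outputs):
    """Return the strict-majority output of redundant replicas,
    or the fail-safe action if no strict majority exists."""
    if not replica_outputs:
        return SAFE_SHUTDOWN
    value, count = Counter(replica_outputs).most_common(1)[0]
    if count * 2 > len(replica_outputs):
        return value
    return SAFE_SHUTDOWN


def decide(replica_outputs, emergency_stop=False):
    """A manual emergency stop overrides everything; otherwise use the vote."""
    if emergency_stop:
        return SAFE_SHUTDOWN
    return vote(replica_outputs)


print(decide(["approve", "approve", "reject"]))                        # approve
print(decide(["approve", "reject", "defer"]))                          # SAFE_SHUTDOWN
print(decide(["approve", "approve", "approve"], emergency_stop=True))  # SAFE_SHUTDOWN
```

The design choice here is that the system fails closed: when the replicas cannot agree, it stops rather than guessing.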

International Collaboration:

- Promote international collaboration and information-sharing to address global security concerns related to ASI. Establish protocols for cooperation in preventing misuse and responding to potential threats.

It is crucial to approach the development and deployment of ASI with a strong focus on responsible and ethical practices to mitigate the risks associated with its misuse. The collaborative efforts of researchers, policymakers, and the wider community are essential to establishing safeguards and protective measures for the responsible use of ASI.

Establishing a global foundation to oversee the code and ownership of Artificial Superintelligence (ASI) is a concept that has been proposed as a potential safeguard against the misuse of powerful AI systems. The idea behind such a foundation is to create a neutral and accountable entity that can ensure responsible development, deployment, and governance of ASI. Here are some considerations for and against the creation of such a foundation.

Pros:

Neutral Oversight:

- A global foundation could provide neutral oversight, free from the influence of individual nations or organizations. This neutrality might help prevent biased or malicious use of ASI.

Ethical Guidelines:

- The foundation could establish and enforce ethical guidelines, ensuring that ASI systems adhere to principles that prioritize human well-being, safety, and fairness.

Security and Transparency:

- The foundation could focus on enhancing the security of ASI systems and promoting transparency in their operation. Regular audits and transparency reports could contribute to public trust.

International Collaboration:

- Collaboration between nations and stakeholders could be facilitated through the foundation, fostering a global approach to addressing challenges and risks associated with ASI.

Resource Allocation:

- The foundation could manage resources related to ASI development, ensuring that they are allocated responsibly and ethically. This could include access to computing power, datasets, and research funding.

Cons:

Governance Challenges:

- Establishing a global foundation would require navigating complex governance challenges, as different nations may have varying perspectives on the regulation and oversight of ASI.

Enforcement and Compliance:

- Ensuring enforcement of guidelines and compliance with the foundation’s principles could be challenging, particularly if there is resistance or non-cooperation from powerful entities.

Technical Expertise:

- The foundation would need a high level of technical expertise to understand and evaluate the complexities of ASI systems. Maintaining a qualified team could be challenging.

Incentives for Cooperation:

- Convincing nations, organizations, and researchers to willingly participate and cooperate with the foundation might require the establishment of incentives and agreements that align with their interests.

Rapid Technological Advancements:

- The pace of technological advancements in AI is rapid, and the foundation may struggle to keep up with emerging challenges and risks.

Accountability Issues:

- Defining mechanisms for holding the foundation itself accountable and ensuring transparency in its decision-making processes would be crucial to maintaining trust.

While the idea of a global foundation for ASI oversight presents potential benefits, its success would depend on overcoming various political, technical, and organizational challenges. It would require a collaborative and diplomatic effort to gain the support and cooperation of nations, companies, and researchers worldwide. Additionally, establishing a foundation should be complemented by robust international agreements and regulations to address the unique ethical and security concerns posed by ASI.